The Rise of Deepfakes: A Threat to All Women

Introduction

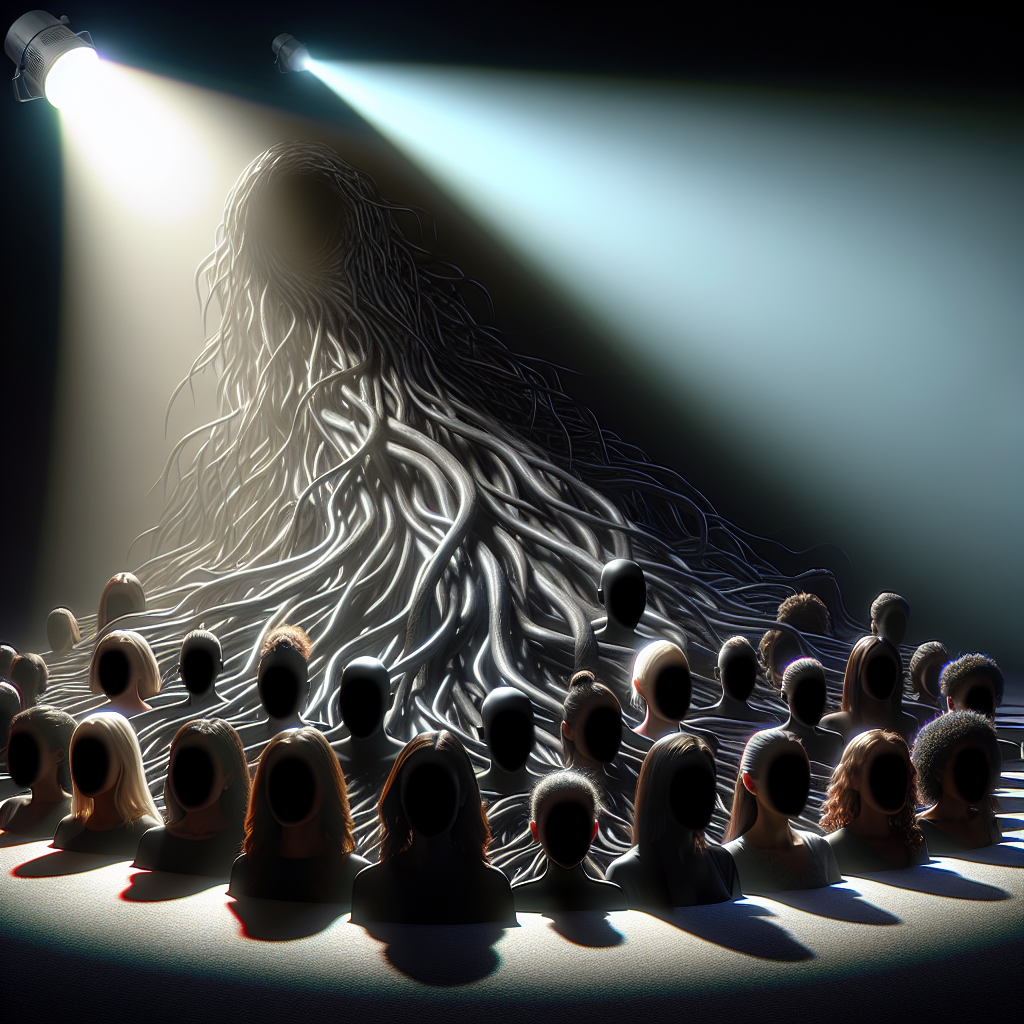

Deepfake technology has been on the rise, posing a significant threat to women worldwide. The recent incident involving Taylor Swift, in which sexually explicit AI-generated images of the singer circulated widely online, has drawn attention to the alarming proliferation of nonconsensual deepfake pornography and the harm it does to its victims.

What are Deepfakes?

The Alliance for Universal Digital Rights defines deepfakes as synthetic media that digitally replace one person's likeness with another's. Deepfake pornography accounts for 98% of all deepfake videos online, and it overwhelmingly targets women: 99% of its victims are female.

Misogyny in Deepfake Pornography

- Deepfake porn is often used to silence women and intimidate those in the public eye.

- Women in positions of power and visibility, such as politicians and celebrities, are particularly vulnerable to deepfake abuse.

- High-profile cases, like Taylor Swift's, can have a ripple effect, putting ordinary women at greater risk of online abuse.

The Impact on Women and Girls

Speaking out against deepfake abuse can make women targets of online harassment and threats. The technology threatens all women and girls, affecting both their personal and professional lives.

Challenges and Legal Framework

Most countries lack adequate laws to address deepfake abuse. In the US, only ten states have laws targeting sexually explicit deepfake content. President Biden's executive order on AI aims to address the risks of deepfake image-based sexual abuse but falls short of concrete federal action.

The Need for Cultural Change

Addressing the root cause of deepfake abuse requires a shift in the cultural norms that enable the creation of harmful, nonconsensual content. In the meantime, victims are often left to navigate complex reporting mechanisms and inadequate content moderation on tech platforms.

Conclusion

The rise of deepfakes presents a clear and present danger to all women. It is crucial to raise awareness, advocate for stronger legal protections, and work towards a cultural shift that respects the privacy and dignity of individuals.

If you have been a victim of nonconsensual image sharing, remember that support is available. Reach out to organizations like the Cyber Civil Rights Initiative for assistance.

For more updates and insights, follow Lucy Morgan on Instagram @lucyalexxandra.

This blog post originally appeared on a renowned fashion and lifestyle platform.

As Ellie Wilson (@ellieokwilson) wrote on January 25, 2024: "I think part of the reason so many men are loving these fake images of Taylor Swift being sexually assaulted is because it's the humiliation and degradation of a strong and powerful woman that does it for them. They want to see her 'put in her place'."